TL;DR

Our minimal “hello, browser” flow (create → connect → visit google.com → release) averages 0.89s on Steel with a p95 of 1.09s (AWS EC2, us-east-1).

Versus other providers we tested, Steel’s end-to-end time is 1.70×–8.95× lower on average, and 1.61×–10.62× lower at p95.

The control‑plane tax (create + release) on Steel is ≈0.229s (≈25.6% of total). Elsewhere it ranges 0.367s → 6.557s (≈21.9% → 82.0% of total).

For AI agents (e.g. browser-use), the create/connect/release flow happens a lot, so shaving seconds here compounds across long runs and many workers.

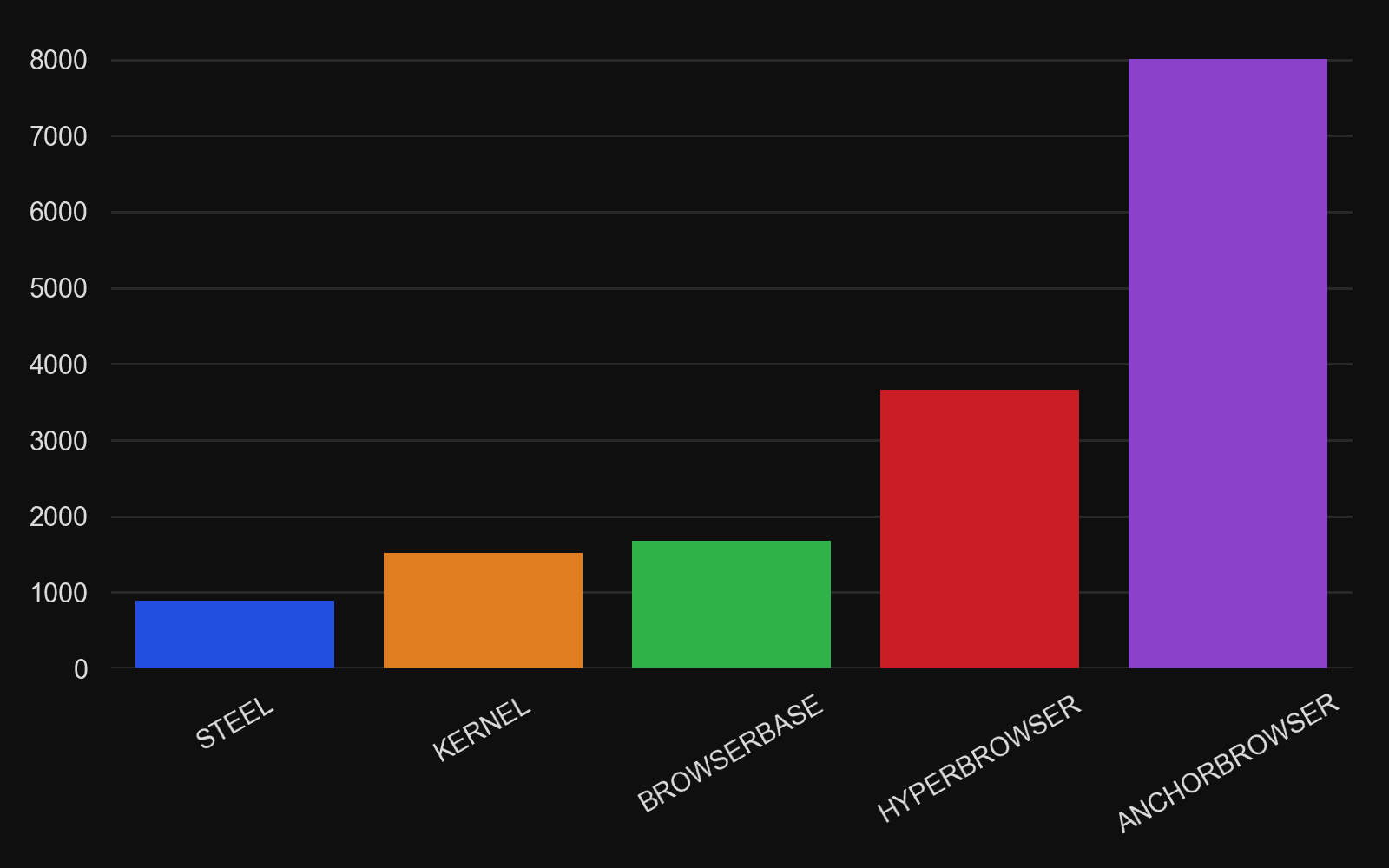

Fig.1. Mean total duration (ms)

Full code & raw results: https://github.com/steel-dev/browserbench

Why this benchmark matters

Agents don’t just “do work” on pages; they start and stop browsers over and over: new identities, clean slates, anti-bot resets, concurrency fan-out.

If you save ~1s per loop and your agent runs 20 loops, you’ve just won ~20s on a single task. Multiply that across hundreds of workers and thousands of tasks/day and you either delight users or grow a queue.

So we asked a simple question: how fast is “hello, browser”, and how much of that time is control plane (session creation/release) versus data plane (connect + navigate)?

What we measured

Provider-agnostic, minimal lifecycle with each vendor’s SDK:

Create a session (cold start / scheduling / identity)

Connect the driver (CDP handshake)

Navigate to google.com (waits for domcontentloaded)

Release the session (cleanup, quotas, accounting)

Environment: AWS EC2 us-east-1

Runs: 5000 per provider

Steel runner (TypeScript, abbreviated):

We used Playwright over CDP for all vendors and mirrored the same four steps with each provider’s SDK. We did not supply any provider‑specific configuration; sessions ran on each vendor’s default settings.
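The four steps above can be timed per stage with a small wrapper. The sketch below is a minimal illustration, not the benchmark's actual runner: `ProviderAdapter`, `timeStage`, and `runLifecycle` are hypothetical names, and each adapter method is assumed to wrap the corresponding vendor SDK or Playwright call (for example, `connect` would wrap Playwright's `chromium.connectOverCDP`, and `goto` would call `page.goto` with `waitUntil: "domcontentloaded"`). The clock is injectable so the harness can be exercised without a network.

```typescript
type Stage = "create" | "connect" | "goto" | "release";

// Hypothetical adapter: wrap each vendor's SDK calls to fit this shape.
interface ProviderAdapter<S, B> {
  create(): Promise<S>;               // create a session
  connect(session: S): Promise<B>;    // attach the driver over CDP
  goto(browser: B): Promise<void>;    // navigate and wait for domcontentloaded
  release(session: S): Promise<void>; // release the session
}

interface RunResult {
  ok: boolean;
  stagesMs: Partial<Record<Stage, number>>;
  totalMs: number;
  failedStage?: Stage;
}

// Time one stage, recording its duration even when it throws.
async function timeStage<T>(
  stage: Stage,
  result: RunResult,
  now: () => number,
  fn: () => Promise<T>,
): Promise<T> {
  const start = now();
  try {
    return await fn();
  } catch (err) {
    // Remember only the first stage that failed.
    if (result.failedStage === undefined) result.failedStage = stage;
    throw err;
  } finally {
    result.stagesMs[stage] = now() - start;
  }
}

// Run create -> connect -> goto -> release once and return per-stage timings.
async function runLifecycle<S, B>(
  adapter: ProviderAdapter<S, B>,
  now: () => number = () => Date.now(),
): Promise<RunResult> {
  const result: RunResult = { ok: false, stagesMs: {}, totalMs: 0 };
  const t0 = now();
  let session: S | undefined;
  let created = false;
  try {
    session = await timeStage("create", result, now, () => adapter.create());
    created = true;
    const s = session;
    const browser = await timeStage("connect", result, now, () => adapter.connect(s));
    await timeStage("goto", result, now, () => adapter.goto(browser));
    result.ok = true;
  } catch {
    result.ok = false;
  } finally {
    if (created) {
      try {
        await timeStage("release", result, now, () => adapter.release(session as S));
      } catch {
        result.ok = false;
      }
    }
    result.totalMs = now() - t0;
  }
  return result;
}
```

Releasing in `finally` means a failed connect or navigation still frees the session, and the release time still counts toward the total.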

Results at a glance

Total time (create → connect → goto → release)

| Provider | Avg (ms) | Median (ms) | p95 (ms) | p99 (ms) |
|---|---|---|---|---|
| STEEL | 894.13 | 867.0 | 1,090.0 | 1,340.05 |
| KERNEL | 1,518.53 | 1,485.0 | 1,752.10 | 1,992.04 |
| BROWSERBASE | 1,676.76 | 1,669.0 | 1,873.00 | 1,981.03 |
| HYPERBROWSER | 3,657.11 | 3,665.5 | 5,338.00 | 6,695.05 |
| ANCHORBROWSER | 8,001.29 | 7,919.0 | 11,561.00 | 13,957.14 |

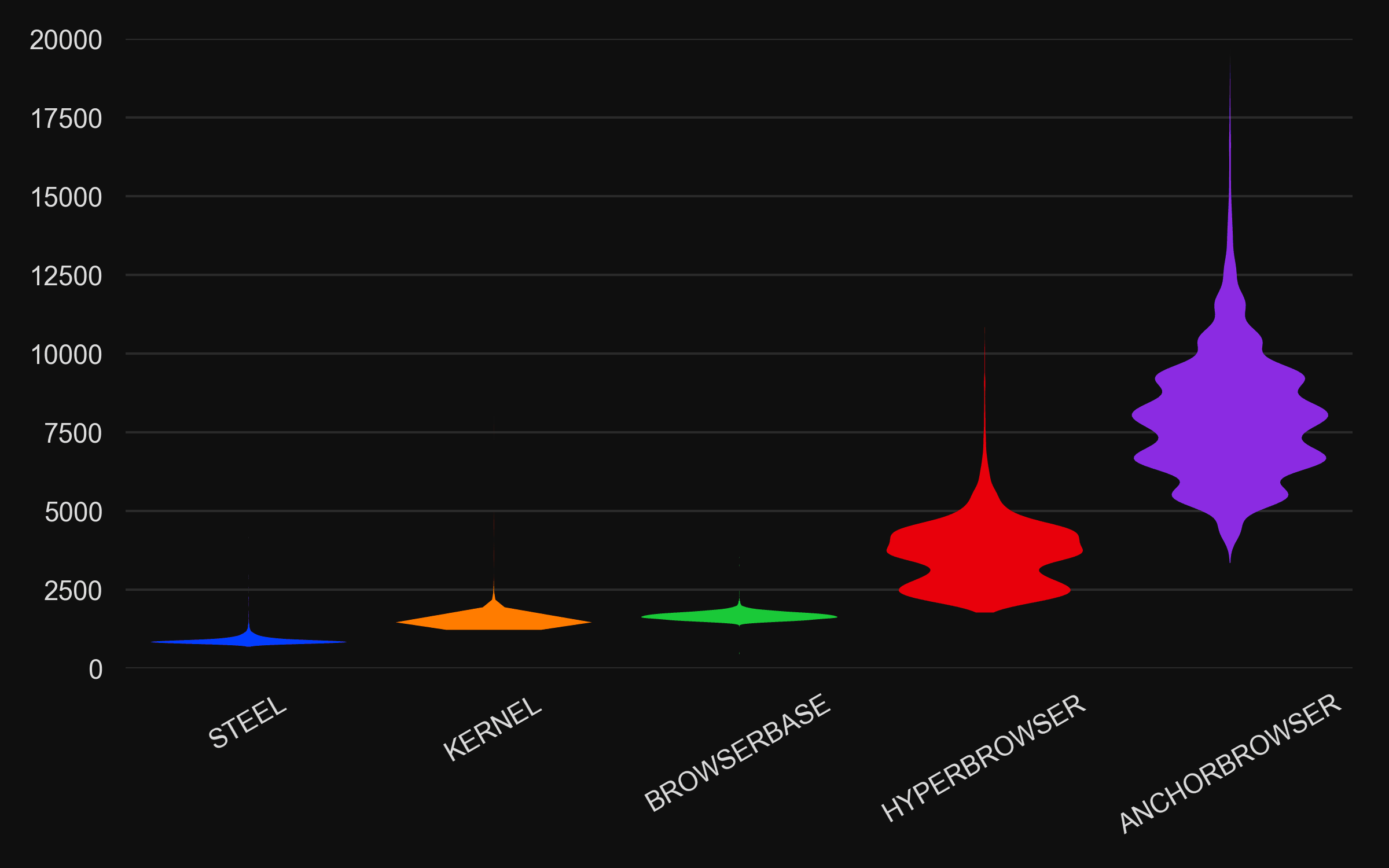

Fig. 2. Total duration distribution (ms)
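For readers reproducing the summary columns from the raw results, here is a minimal sketch of how average, median, p95, and p99 can be derived from a list of per-run durations. It assumes linear interpolation between the closest ranks (the default in NumPy); the post does not state which percentile method was used, so other methods may give slightly different tail values. The function names are illustrative.

```typescript
// Percentile with linear interpolation between closest ranks.
// `sortedAsc` must already be sorted ascending; `p` is in [0, 100].
function percentile(sortedAsc: number[], p: number): number {
  if (sortedAsc.length === 0) throw new Error("no samples");
  if (p < 0 || p > 100) throw new Error("p must be between 0 and 100");
  const rank = (p / 100) * (sortedAsc.length - 1);
  const lo = Math.floor(rank);
  const hi = Math.ceil(rank);
  return sortedAsc[lo] + (sortedAsc[hi] - sortedAsc[lo]) * (rank - lo);
}

// Summarize per-run total durations (ms) into the columns used in the table.
function summarize(samplesMs: number[]) {
  const sorted = samplesMs.slice().sort((a, b) => a - b);
  const sum = sorted.reduce((acc, x) => acc + x, 0);
  return {
    count: sorted.length,
    avg: sum / sorted.length,
    median: percentile(sorted, 50),
    p95: percentile(sorted, 95),
    p99: percentile(sorted, 99),
  };
}

// Tiny illustrative input, not benchmark data.
console.log(summarize([900, 870, 1100, 860, 1350]));
```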

Steel speedup (avg / p95):

vs Kernel: 1.70× / 1.61×

vs Browserbase: 1.88× / 1.72×

vs Hyperbrowser: 4.09× / 4.90×

vs AnchorBrowser: 8.95× / 10.62×

Time saved for your agents (per 1,000 sessions):

~10.41 min vs Kernel

~13.05 min vs Browserbase

~46.06 min vs Hyperbrowser

~118.46 min vs AnchorBrowser (~1 hr 58 min)
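The speedup and time-saved figures follow directly from the totals table: speedup is the other provider's time divided by Steel's, and minutes saved is the difference in averages multiplied by the session count. The sketch below recomputes them from the rounded table values, so results may differ from the published figures in the last digit. Function and variable names are illustrative.

```typescript
interface TotalStats {
  provider: string;
  avgMs: number;
  p95Ms: number;
}

// Values copied from the "Total time" table above.
const totals: TotalStats[] = [
  { provider: "STEEL", avgMs: 894.13, p95Ms: 1090.0 },
  { provider: "KERNEL", avgMs: 1518.53, p95Ms: 1752.1 },
  { provider: "BROWSERBASE", avgMs: 1676.76, p95Ms: 1873.0 },
  { provider: "HYPERBROWSER", avgMs: 3657.11, p95Ms: 5338.0 },
  { provider: "ANCHORBROWSER", avgMs: 8001.29, p95Ms: 11561.0 },
];

// How many times longer `otherMs` is than `baselineMs`.
function speedup(otherMs: number, baselineMs: number): number {
  return otherMs / baselineMs;
}

// Minutes saved over `sessions` runs when each run is faster by the difference in averages.
function minutesSavedPer(sessions: number, otherAvgMs: number, baselineAvgMs: number): number {
  return ((otherAvgMs - baselineAvgMs) * sessions) / 60000;
}

const baseline = totals[0];
for (const t of totals.slice(1)) {
  const avgX = speedup(t.avgMs, baseline.avgMs).toFixed(2);
  const p95X = speedup(t.p95Ms, baseline.p95Ms).toFixed(2);
  const saved = minutesSavedPer(1000, t.avgMs, baseline.avgMs).toFixed(2);
  console.log(`${t.provider}: ${avgX}x avg, ${p95X}x p95, ~${saved} min saved per 1,000 sessions`);
}
```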

Mind the tails. Hyperbrowser and AnchorBrowser reach p99s of roughly 6.7 s and 14.0 s, the kind of outliers users actually feel during bursts and autoscaling.

Where the time goes (stage breakdown)

| Provider | Create (ms) | Connect (ms) | Goto (ms) | Release (ms) | Create+Release (ms) | % of Total |
|---|---|---|---|---|---|---|
| STEEL | 181.57 | 174.64 | 490.29 | 47.62 | 229.19 | 25.6% |
| KERNEL | 261.10 | 428.90 | 533.95 | 294.57 | 555.67 | 36.6% |
| BROWSERBASE | 188.18 | 517.37 | 792.27 | 178.99 | 367.17 | 21.9% |
| HYPERBROWSER | 1,731.63 | 347.60 | 377.89 | 1,199.98 | 2,931.61 | 80.2% |
| ANCHORBROWSER | 3,796.55 | 184.29 | 1,259.66 | 2,760.79 | 6,557.34 | 82.0% |

Fig. 3. Control-plane cost (create + release) (ms)
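The control-plane and data-plane split is simple arithmetic on the stage means: control plane is create + release, data plane is connect + goto, and the share is control plane over the sum of all four stages. A minimal sketch using the table's values (names are illustrative; because the stage means are rounded, the summed total can differ from the totals table by a few hundredths of a millisecond):

```typescript
interface StageMeans {
  create: number;
  connect: number;
  goto: number;
  release: number;
}

// Split mean stage times (ms) into control plane and data plane.
function splitPlanes(s: StageMeans) {
  const controlMs = s.create + s.release;
  const dataMs = s.connect + s.goto;
  const totalMs = controlMs + dataMs;
  return { controlMs, dataMs, totalMs, controlShare: controlMs / totalMs };
}

// Stage means copied from the breakdown table above.
const stageMeans: Array<{ provider: string; stages: StageMeans }> = [
  { provider: "STEEL", stages: { create: 181.57, connect: 174.64, goto: 490.29, release: 47.62 } },
  { provider: "KERNEL", stages: { create: 261.1, connect: 428.9, goto: 533.95, release: 294.57 } },
  { provider: "BROWSERBASE", stages: { create: 188.18, connect: 517.37, goto: 792.27, release: 178.99 } },
  { provider: "HYPERBROWSER", stages: { create: 1731.63, connect: 347.6, goto: 377.89, release: 1199.98 } },
  { provider: "ANCHORBROWSER", stages: { create: 3796.55, connect: 184.29, goto: 1259.66, release: 2760.79 } },
];

for (const row of stageMeans) {
  const r = splitPlanes(row.stages);
  const pct = (r.controlShare * 100).toFixed(1);
  console.log(`${row.provider}: control ${r.controlMs.toFixed(2)} ms (${pct}%), data ${r.dataMs.toFixed(2)} ms`);
}
```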

Takeaways

Absolute control-plane cost is smallest on Steel: ~229 ms. That’s 1.6× lower than Browserbase, 2.4× lower than Kernel, 12.8× lower than Hyperbrowser, and 28.6× lower than AnchorBrowser.

Who’s dominated by control plane? Hyperbrowser and AnchorBrowser (≈80% of total). Kernel’s control plane is material (≈37%). Browserbase’s control plane share is small (≈22%), but still ~1.6× Steel in absolute terms.

Data-plane (connect + first goto): Steel = ~665 ms. Kernel’s is ~1.45×, Browserbase’s ~1.97×, and AnchorBrowser’s ~2.17× Steel’s. Hyperbrowser’s data-plane is close to Steel’s (1.09×), but its control-plane tax overwhelms it.

Reliability (5,000 attempts/provider)

Steel: 100% success (0 failures)

Kernel: 100% success (0 failures)

Browserbase: 99.96% success (2 failures)

Hyperbrowser: 100% success (0 failures)

AnchorBrowser: 97.34% success (133 failures)

Most SDKs perform automatic retries on certain errors (e.g., transient network, provisioning). Our “success” counts a run as successful after retries, so these rates may overstate true first-attempt success and understate incident frequency. Inspect per-stage error/retry telemetry in your traces for an accurate picture.
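To see how much retries flatter the headline number, you can compare eventual success with first-attempt success. The sketch below assumes your own telemetry records an attempt count per run; the `RunRecord` shape and its `attempts` field are hypothetical and are not part of the published benchmark results.

```typescript
// Hypothetical per-run telemetry record.
interface RunRecord {
  ok: boolean;      // did the run eventually succeed?
  attempts: number; // total tries, including the first
}

// Compare success after retries with success on the first attempt.
function successRates(runs: RunRecord[]) {
  if (runs.length === 0) throw new Error("no runs");
  const eventual = runs.filter((r) => r.ok).length;
  const firstTry = runs.filter((r) => r.ok && r.attempts === 1).length;
  return {
    eventualRate: eventual / runs.length,
    firstAttemptRate: firstTry / runs.length,
    retriedRuns: runs.filter((r) => r.attempts > 1).length,
  };
}

// Illustrative data only: three of four runs succeed, but only two on the first try.
const sampleRuns: RunRecord[] = [
  { ok: true, attempts: 1 },
  { ok: true, attempts: 2 },
  { ok: false, attempts: 3 },
  { ok: true, attempts: 1 },
];
console.log(successRates(sampleRuns));
```

A gap between the two rates points at incidents that the after-retry number hides.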

Caveats

This is a cold lifecycle for a single navigation. Real agents do auth, multi‑step forms, file uploads, and more.

Results reflect AWS EC2 us‑east‑1 and google.com as the first page. Region, instance class, network and page choice influence numbers.

Production guidance for agent builders

Amortize the handshake. Batch multiple subtasks per session when safe, and reuse sessions for longer flows.

Simplify the surface. Mobile Mode yields smaller DOMs and higher click accuracy for vision agents.

Watch the tails. Track p95/p99 per stage in your traces, and optimize for outliers, not just averages.

Prefer headful when flaky. Headful Sessions improve compatibility with sites that resist headless automation.

Debug faster. Agent Logs give you action‑by‑action traces aligned to live view and MP4 replay.
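As a rough illustration of the "amortize the handshake" advice, the sketch below estimates per-subtask cost when create, connect, and release are paid once per session and each subtask costs one navigation. This is a simplified model under stated assumptions: it uses the mean stage times from the breakdown table and ignores page complexity, caching, and any work beyond the first `goto`. The names are illustrative.

```typescript
interface LifecycleMeans {
  create: number;
  connect: number;
  goto: number;
  release: number;
}

// Estimated mean cost per subtask (ms) when `subtasksPerSession` subtasks share one session.
function perSubtaskMs(stages: LifecycleMeans, subtasksPerSession: number): number {
  if (subtasksPerSession < 1 || Math.floor(subtasksPerSession) !== subtasksPerSession) {
    throw new Error("subtasksPerSession must be a positive integer");
  }
  // Paid once per session, then spread across the subtasks.
  const fixedMs = stages.create + stages.connect + stages.release;
  return fixedMs / subtasksPerSession + stages.goto;
}

// Steel's mean stage times from the breakdown table.
const steelMeans: LifecycleMeans = { create: 181.57, connect: 174.64, goto: 490.29, release: 47.62 };
for (const n of [1, 2, 5, 10]) {
  console.log(`${n} subtask(s) per session: ~${perSubtaskMs(steelMeans, n).toFixed(0)} ms each`);
}
```

The fixed share shrinks as more subtasks share a session, so the benefit of batching is largest on providers where create + release dominates the total.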

Build with Steel

Humans use Chrome. Agents use Steel.

A headless browser API built for AI products: fast session starts, persistent profiles, stealth & CAPTCHA, live viewers + MP4 replays, and scaling without the DevOps tax.

Docs: https://docs.steel.dev

Dashboard: https://app.steel.dev

Discord: https://discord.gg/steel-dev

Full code & raw results: https://github.com/steel-dev/browserbench
